
Grindr's Chat Architecture: Scaling to Billions of Messages

Posted on January 1, 1 (Last modified on May 18, 2025) • 10 min read • 2,113 words

Deconstructing Grindr’s Chat Architecture: A Symphony of Services at Scale

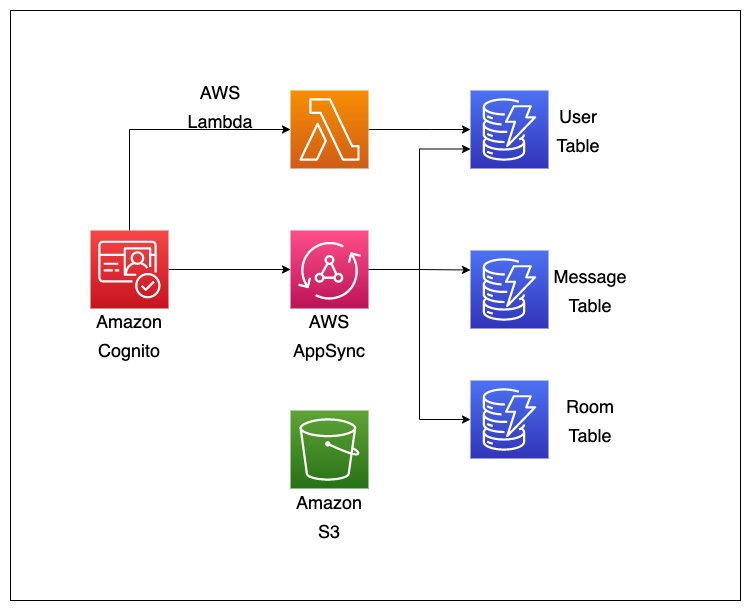

Building a chat system that serves millions of users and processes billions of messages is no small feat. Grindr, a platform that facilitated an astounding 121 billion messages last year, offers a compelling case study in designing for massive scale, resilience, and real-time responsiveness. The architecture underpinning this system is a carefully orchestrated ensemble of modern cloud services and design patterns, particularly leveraging the AWS ecosystem. What’s intriguing is not just the choice of individual technologies, but how they interoperate to solve specific challenges inherent in a large-scale social messaging application.

This exploration, inspired by a recent overview of their system, aims to dissect the architectural choices, the trade-offs involved, and the principles that allow Grindr’s chat to function effectively. For architects and technology professionals, understanding such real-world implementations provides invaluable insights into building robust, scalable distributed systems.

The User’s Message: A Journey Through the System

Before diving into the architectural deep end, let’s trace the path of a single message. When a user hits “send,” the message embarks on a multi-stage journey:

- Websocket Layer - The Front Door: The message first arrives at the websocket layer. This is the initial point of contact, responsible for basic but crucial validations: Is the user authenticated? Is the recipient blocking the sender? This immediate feedback loop is vital for user experience.

- EKS - The Orchestration Brain: Once past the initial checks, the message (or rather, the request to process it) hits Amazon Elastic Kubernetes Service (EKS). EKS serves as the central nervous system, hosting a microservice-based architecture. It’s here that the system decides which specialized microservices need to be invoked.

- Chat Metadata Service & Persistence: A key service running on EKS is the chat metadata service. It performs further business logic validation and, critically, persists the message.

This initial flow highlights a common pattern in modern distributed systems: a reactive frontend (websockets), a robust orchestration layer (EKS), and specialized microservices for distinct functionalities.
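The front-door checks described above can be sketched in a few lines. This is a minimal illustration, not Grindr's implementation: `GatewayState`, `validate_send`, the token map, and the block set are all hypothetical names, and the in-memory lookups stand in for whatever auth and block-list services the real websocket layer calls.

```python
from dataclasses import dataclass, field


@dataclass
class GatewayState:
    """In-memory stand-ins for the auth and block-list lookups (hypothetical)."""
    valid_tokens: dict = field(default_factory=dict)  # token -> user id
    blocks: set = field(default_factory=set)          # (blocker, blocked) pairs


def validate_send(state: GatewayState, token: str, recipient: str) -> tuple:
    """Run the cheap front-door checks before the message goes any further."""
    sender = state.valid_tokens.get(token)
    if sender is None:
        return False, "unauthenticated"
    if (recipient, sender) in state.blocks:
        return False, "blocked"
    return True, sender


state = GatewayState(
    valid_tokens={"tok-alice": "alice"},
    blocks={("carol", "alice")},  # carol has blocked alice
)
print(validate_send(state, "tok-alice", "bob"))    # (True, 'alice')
print(validate_send(state, "bad-token", "bob"))    # (False, 'unauthenticated')
print(validate_send(state, "tok-alice", "carol"))  # (False, 'blocked')
```

Rejecting a message this early means the sender gets an answer without any downstream service or database being touched.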

The Dual-Database Strategy: Tailoring Persistence to Data Characteristics

Perhaps one of the most interesting aspects of Grindr’s chat architecture is its data persistence strategy, which employs a combination of Amazon DynamoDB and Amazon Aurora PostgreSQL. This isn’t an arbitrary choice; it’s a pragmatic decision rooted in the different characteristics and access patterns of the data they handle.

- DynamoDB for Message Content: The actual content of the chat messages is stored in DynamoDB. The rationale is clear: scalability for write-heavy, rapidly growing data. Chat messages are immutable once sent, and their volume is immense. DynamoDB, a NoSQL key-value and document database, excels at ingesting and retrieving vast amounts of data with predictable low latency, making it an ideal candidate for this kind of workload. Its ability to scale horizontally without significant operational overhead is a massive win for a system handling billions of messages.

- Aurora PostgreSQL for Metadata: Conversely, message metadata (headers, “envelopes,” and, crucially, the user’s inbox view: which messages are unread, who sent the last message in a conversation, and so on) is stored in Aurora PostgreSQL. This data is characterized by frequent updates and read-heavy access patterns. An inbox is a dynamic entity; its state changes with every new message received or read. Relational databases like PostgreSQL, especially a managed and optimized version like Aurora, are well-suited for handling complex relational data, transactional consistency, and the kind of structured queries needed to reconstruct an inbox view. Aurora’s ability to handle high read loads and to update related rows within a single transaction makes it a strong choice for this mutable, relational data.

This dual-database approach is a classic example of polyglot persistence – using the right database for the right job. While it introduces complexity in managing two different database systems, the performance and scalability benefits derived from optimizing for specific data types and access patterns often outweigh this overhead, especially at Grindr’s scale. The trade-off is clear: operational simplicity of a single database system versus the performance and scalability gains of specialized systems. Grindr has opted for the latter, a common decision for high-throughput applications.
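To make the split concrete, here is a small sketch of the write path under this polyglot design. It assumes a simplified schema that is not from the source: a plain dictionary stands in for DynamoDB, an in-memory SQLite table stands in for Aurora PostgreSQL, and `store_message`, `load_inbox`, and the `inbox` columns are hypothetical.

```python
import sqlite3
import uuid

# Stand-in for DynamoDB: a key-value map of immutable message bodies.
message_bodies = {}

# Stand-in for Aurora PostgreSQL: a relational table holding the mutable inbox view.
db = sqlite3.connect(":memory:")
db.execute(
    """CREATE TABLE inbox (
           owner TEXT, peer TEXT, last_message_id TEXT, unread INTEGER,
           PRIMARY KEY (owner, peer))"""
)


def store_message(sender, recipient, body):
    """Write the body once to the key-value store, then update both inbox rows."""
    message_id = str(uuid.uuid4())
    message_bodies[message_id] = body  # written once, never updated
    with db:  # one transaction covers both metadata rows
        for owner, peer, unread in ((sender, recipient, 0), (recipient, sender, 1)):
            db.execute(
                """INSERT INTO inbox VALUES (?, ?, ?, ?)
                   ON CONFLICT(owner, peer) DO UPDATE SET
                       last_message_id = excluded.last_message_id,
                       unread = unread + excluded.unread""",
                (owner, peer, message_id, unread),
            )
    return message_id


def load_inbox(owner):
    """Return (peer, latest message body, unread count) for each conversation."""
    rows = db.execute(
        "SELECT peer, last_message_id, unread FROM inbox WHERE owner = ? ORDER BY peer",
        (owner,),
    ).fetchall()
    return [(peer, message_bodies[mid], unread) for peer, mid, unread in rows]


store_message("alice", "bob", "hi")
store_message("alice", "bob", "you there?")
print(load_inbox("bob"))    # [('alice', 'you there?', 2)]
print(load_inbox("alice"))  # [('bob', 'you there?', 0)]
```

The message body is appended and never touched again, while each send mutates two small relational rows. That difference in write behavior is the reason the two kinds of data end up in different stores.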

Speeding Up the Inbox: The Role of ElastiCache (Redis)

A responsive inbox is critical for user experience in any chat application. Constantly querying the primary database (Aurora PostgreSQL in this case) for inbox information for every active user would place an enormous strain on it. To address this, Grindr employs Amazon ElastiCache, specifically using Redis, as a caching layer.

The strategy is straightforward:

- When a user connects, the application first checks Redis for their inbox data.

- If the inbox is present in the cache (a cache hit), it’s served immediately, providing a fast load time and avoiding a database query.

- If the inbox isn’t in Redis (a cache miss), perhaps because the user hasn’t been online for a while, the system fetches the complete inbox data from Aurora PostgreSQL.

- This freshly retrieved data is then used to populate the Redis cache.

- From that point forward, as new messages arrive or messages are read, the Redis cache is kept up-to-date, ensuring that subsequent inbox views are served quickly from the cache as long as the user remains online.

The overview doesn’t explicitly detail the cache invalidation or update strategy (e.g., write-through, write-around, or how “kept up to date” precisely works). A common approach is to update the cache whenever the primary data store (Aurora) changes: the application can explicitly update Redis after writing to PostgreSQL, or it can rely on triggers or change data capture (CDC) mechanisms where available and appropriate. The Time-To-Live (TTL) for cached data is also an important consideration, though not specified; it would likely be tuned based on user activity patterns. The goal is to balance cache freshness with cache hit ratios and database load.
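The read path above is the classic cache-aside pattern, and one plausible reading of "kept up to date" is that the application updates the cached copy after each database write. The sketch below illustrates that combination only; it is not Grindr's strategy. `InboxCache`, its TTL default, and the `load_from_db` callable are hypothetical, and a dictionary stands in for Redis.

```python
import time


class InboxCache:
    """Cache-aside inbox reads with explicit updates on write (hypothetical design)."""

    def __init__(self, load_from_db, ttl_seconds=300.0, clock=time.monotonic):
        self._load_from_db = load_from_db  # callable: user_id -> list of conversations
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}                 # user_id -> (expires_at, inbox)
        self.db_reads = 0

    def get_inbox(self, user_id):
        entry = self._entries.get(user_id)
        if entry and entry[0] > self._clock():
            return entry[1]                # cache hit
        self.db_reads += 1                 # cache miss: fall back to the database
        inbox = list(self._load_from_db(user_id))
        self._entries[user_id] = (self._clock() + self._ttl, inbox)
        return inbox

    def on_new_message(self, user_id, conversation):
        """Call after the database write; users without a live entry are skipped."""
        entry = self._entries.get(user_id)
        if entry and entry[0] > self._clock():
            entry[1].insert(0, conversation)


now = [0.0]                                 # fake clock keeps the example deterministic
database = {"alice": ["bob: hey"]}          # stand-in for the relational inbox query
cache = InboxCache(lambda uid: database.get(uid, []), ttl_seconds=60, clock=lambda: now[0])

cache.get_inbox("alice")                    # miss: reads the database
cache.get_inbox("alice")                    # hit: served from the cache
database["alice"].insert(0, "carol: hi")    # the primary store is written first
cache.on_new_message("alice", "carol: hi")  # then the cached copy is brought up to date
print(cache.get_inbox("alice"), cache.db_reads)  # ['carol: hi', 'bob: hey'] 1
```

Skipping users with no live cache entry keeps memory proportional to recently active users; their inbox is simply rebuilt from the database the next time they connect.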

Reaching Users: Online via Websockets, Offline via Push Notifications

Once a message is persisted and the sender’s inbox is updated, the system needs to deliver it to the recipient. This involves determining the recipient’s current online status:

- Online Users: If the recipient is currently active and has an open websocket connection, the message is delivered directly and immediately over this persistent connection. This provides the real-time experience users expect.

- Offline Users: If the recipient is offline, a direct websocket delivery isn’t possible. In this scenario, Grindr leverages Amazon Pinpoint to send a push notification to the user’s device. Pinpoint is a multi-channel messaging service, and using it for push notifications offloads the complexity of managing connections with Apple’s APNS and Google’s FCM.

Accurately tracking online/offline status at scale is a non-trivial challenge. Typically, it involves a combination of heartbeats over the websocket connection and presence services that maintain the state of connected users. When a websocket connection drops or a heartbeat is missed, the user can be marked as offline.
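A heartbeat-based presence check and the resulting delivery decision might look like the following. This is a sketch of the general technique, not the production design: `PresenceTracker`, `route_delivery`, and the 30-second timeout are hypothetical, and a real system would keep this state in a shared store rather than in one process.

```python
import time


class PresenceTracker:
    """Marks a user online while heartbeats keep arriving within the timeout."""

    def __init__(self, timeout_seconds=30.0, clock=time.monotonic):
        self._timeout = timeout_seconds
        self._clock = clock
        self._last_seen = {}  # user_id -> time of the last heartbeat

    def heartbeat(self, user_id):
        self._last_seen[user_id] = self._clock()

    def disconnect(self, user_id):
        """Called when the websocket closes cleanly."""
        self._last_seen.pop(user_id, None)

    def is_online(self, user_id):
        last = self._last_seen.get(user_id)
        return last is not None and self._clock() - last <= self._timeout


def route_delivery(presence, recipient):
    """Choose the delivery channel: live socket if online, push notification otherwise."""
    return "websocket" if presence.is_online(recipient) else "push"


now = [0.0]  # fake clock keeps the example deterministic
presence = PresenceTracker(timeout_seconds=30, clock=lambda: now[0])
presence.heartbeat("bob")
print(route_delivery(presence, "bob"))    # websocket
now[0] = 45.0                             # bob has missed his heartbeats
print(route_delivery(presence, "bob"))    # push
print(route_delivery(presence, "carol"))  # push (never connected)
```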

Security: Protecting User Conversations

Given the personal nature of chat conversations, security is paramount. Grindr implements several measures:

- Application-Level Encryption with KMS: All message content stored in DynamoDB is fully encrypted. Crucially, this encryption happens at the application layer, meaning the chat metadata service encrypts the message before sending it to DynamoDB. The encryption keys themselves are managed by Amazon Key Management Service (KMS). This approach ensures that the data is encrypted in transit to DynamoDB and at rest within DynamoDB, with Grindr controlling the encryption process via their application and KMS.

- Asynchronous Anti-Spam Measures via Kafka: To combat spam and other forms of abuse, metadata events related to messages are sent to an Apache Kafka cluster. Consumers of these Kafka topics then perform asynchronous operations, including running messages through anti-spam filters and other protective analytics. This decouples potentially complex and time-consuming spam detection logic from the primary message delivery path, ensuring that message sending remains fast.

Kafka: The Backbone for Asynchronous Operations and System Evolution

The use of Apache Kafka for asynchronous processing is another significant architectural choice. Every time a message is sent, a metadata event is published to Kafka. This event stream is then consumed by various downstream services for tasks such as:

- Anti-spam and content moderation.

- Tracking user connections (the overview does not detail how Kafka supports this).

- Potentially, analytics, metrics, and other features that can benefit from a real-time stream of chat activity.

The decision to use Kafka over alternatives like RabbitMQ was driven by several factors:

- Ease of Adding New Consumers: Kafka’s publish-subscribe model allows new consumer applications to be added to process the event stream without impacting existing producers or consumers. This is invaluable for evolving the system and adding new features (e.g., new types of spam detection rules, analytics) without re-architecting the core message flow. Producers don’t need to be aware of new consumers.

- Message Replayability: Kafka retains messages in its topics for a configurable period. This allows services to “replay” messages if needed, for instance, to reprocess historical data with new logic or to recover from a consumer failure. This is a powerful feature for resilience and data processing flexibility.

Kafka acts as a durable, scalable buffer and a central point for decoupling various parts of the system. This decoupling enhances resilience (if a downstream consumer fails, the main chat flow isn’t affected) and evolvability.
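Both properties, independent consumers and replay, follow from the log-plus-offsets model. The toy class below imitates that model in memory to show the mechanics; it is not a Kafka client, and `EventLog`, `poll`, and `seek` are hypothetical names chosen to echo Kafka's vocabulary.

```python
class EventLog:
    """Append-only log with one read offset per consumer group (toy model of a topic)."""

    def __init__(self):
        self._events = []
        self._offsets = {}  # group name -> next offset to read

    def publish(self, event):
        """Producers only append; they know nothing about who reads."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, group, max_events=100):
        """Return the next unread events for this group and advance its offset."""
        start = self._offsets.get(group, 0)  # in this sketch, new groups start at offset 0
        batch = self._events[start:start + max_events]
        self._offsets[group] = start + len(batch)
        return batch

    def seek(self, group, offset):
        """Rewind (or skip ahead) so retained events can be reprocessed."""
        self._offsets[group] = offset


log = EventLog()
for message_id in ("m1", "m2", "m3"):
    log.publish({"type": "message_sent", "id": message_id})

print(len(log.poll("anti-spam")))  # 3
log.publish({"type": "message_sent", "id": "m4"})
print(len(log.poll("analytics")))  # 4: a new group reads the full log, producers unchanged
print(len(log.poll("anti-spam")))  # 1: only the event it has not seen yet
log.seek("anti-spam", 0)           # replay, e.g. after deploying new detection rules
print(len(log.poll("anti-spam")))  # 4
```

Because each group owns its own offset, adding the `analytics` reader changes nothing for `anti-spam` or for the producer, and a consumer that was down simply resumes from where its offset left off.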

Designing for Scale and Resilience: An Integrated Approach

The Grindr chat system’s ability to handle 121 billion messages a year isn’t accidental; it’s the result of deliberate design choices across its components:

- Websockets: Handle the initial flood of connections and messages, performing basic validation. Scaling this layer often involves load balancing across a fleet of websocket servers and managing connection state efficiently.

- EKS: Provides the scalable and resilient platform for running the microservices that form the core chat logic. Kubernetes’ self-healing and auto-scaling capabilities are crucial here.

- Databases (DynamoDB & Aurora): As discussed, chosen for their specific strengths in handling different data types and access patterns at scale. Both are managed AWS services, reducing operational burden.

- ElastiCache (Redis): Provides an in-memory caching layer that significantly reduces read load on the primary databases and improves latency for end-users.

- Kafka: Decouples asynchronous tasks, allowing the core messaging pipeline to remain fast and enabling easy addition of new features and analytics. Its distributed nature provides inherent scalability and fault tolerance.

- Amazon Pinpoint: Offloads the complexity of delivering push notifications to various mobile platforms.

High availability and fault tolerance are addressed by leveraging the inherent capabilities of these AWS services (many of which offer multi-AZ deployments, auto-scaling, and managed backups) and by designing the application logic to be resilient. For example, the decoupling provided by Kafka means that if an anti-spam service goes down, messages can still be sent and received, and the anti-spam processing can catch up later.
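To put the 121 billion figure in perspective, a quick back-of-the-envelope conversion gives the average sustained rate the pipeline has to absorb. Only the yearly total comes from the article; the calculation assumes a 365-day year and says nothing about peaks, which will sit above the average.

```python
MESSAGES_PER_YEAR = 121_000_000_000  # figure quoted in the article
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

average_per_second = MESSAGES_PER_YEAR / SECONDS_PER_YEAR
average_per_day = MESSAGES_PER_YEAR / 365

print(f"{average_per_day:,.0f} messages per day on average")        # 331,506,849
print(f"{average_per_second:,.0f} messages per second on average")  # 3,837
```

Several thousand writes per second, every second of the year, is the baseline that the message store, the inbox metadata path, and the event stream each have to sustain.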

While the overview didn’t explicitly detail the evolutionary path to this architecture, it’s common for systems of this scale to undergo significant iteration. Initial designs might be simpler, and as bottlenecks are identified and new requirements emerge, the architecture evolves. The current state, with its clear separation of concerns, use of specialized data stores, and emphasis on asynchronous processing, reflects a mature system designed with lessons learned from operating at massive scale. The emphasis on managed services (EKS, DynamoDB, Aurora, ElastiCache, Pinpoint, KMS, and Kafka, which on AWS is often run via Amazon MSK) indicates a strategy to focus engineering efforts on core business logic rather than on managing underlying infrastructure.

Reflections for the Architect

Grindr’s chat architecture offers several valuable lessons for anyone building large-scale, real-time communication systems:

- Specialize Your Data Stores: Don’t shy away from polyglot persistence if your data access patterns warrant it. The benefits in performance and scalability can be substantial.

- Cache Aggressively but Wisely: Caching is essential for read-heavy workloads and improving user-perceived performance. Understand your data lifecycle to implement effective caching strategies.

- Embrace Asynchronous Processing: Decouple non-critical path operations using message queues or event streams like Kafka. This improves responsiveness, resilience, and evolvability.

- Leverage Managed Services: Cloud providers offer powerful managed services that can handle much of the undifferentiated heavy lifting of running databases, caches, orchestrators, and message brokers. This allows teams to focus on features that differentiate their product.

- Design for Failure: In any distributed system, components will fail. Design with resilience in mind, using patterns like retries, circuit breakers, and ensuring that critical paths are isolated from less critical ones.

- Security is Not an Afterthought: Embed security practices like encryption and abuse detection deep within the architecture.
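As an illustration of the "Design for Failure" point, here is a minimal circuit breaker, one of the patterns named in the list. The source does not say whether Grindr uses one; `CircuitBreaker`, `CircuitOpenError`, and the thresholds are hypothetical, and the sketch is single-threaded for brevity.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    """Stops calling a failing dependency for a cool-down period after repeated errors."""

    def __init__(self, failure_threshold=3, reset_after_seconds=30.0, clock=time.monotonic):
        self._threshold = failure_threshold
        self._reset_after = reset_after_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            # Cool-down elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        self._failures = 0       # any success closes the circuit again
        self._opened_at = None
        return result


def failing_dependency():
    raise ConnectionError("downstream unavailable")


now = [0.0]  # fake clock keeps the example deterministic
breaker = CircuitBreaker(failure_threshold=2, reset_after_seconds=10, clock=lambda: now[0])
for _ in range(2):
    try:
        breaker.call(failing_dependency)
    except ConnectionError:
        pass  # after the second failure the circuit opens

try:
    breaker.call(failing_dependency)
except CircuitOpenError as exc:
    print(exc)  # circuit open; skipping call
```

While the circuit is open, callers fail fast instead of queuing behind a dependency that is already struggling, which keeps a non-critical failure from slowing the critical path.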

The journey to building and maintaining a system like Grindr’s chat is continuous. As user numbers grow, features evolve, and new technologies emerge, the architecture too must adapt. However, the foundational principles of scalability, resilience, appropriate data handling, and robust security evident in their current design provide a strong blueprint for sustained success.